I asked Claude to build me a customer dashboard last month. Ten minutes later, I had gorgeous React components, a clean API structure, and authentication that worked flawlessly on my laptop. I felt like a wizard.

Two days later, when we put it in front of actual users, everything fell apart. The API crumbled under concurrent requests. The authentication tokens expired in the middle of user sessions. The database queries that felt snappy with my 10 test records took 30 seconds with real data.

The code looked beautiful. It even worked—sort of. But it was a disaster waiting to happen, and I had no one to blame but myself. I’d asked for a construction worker when I needed an architect.

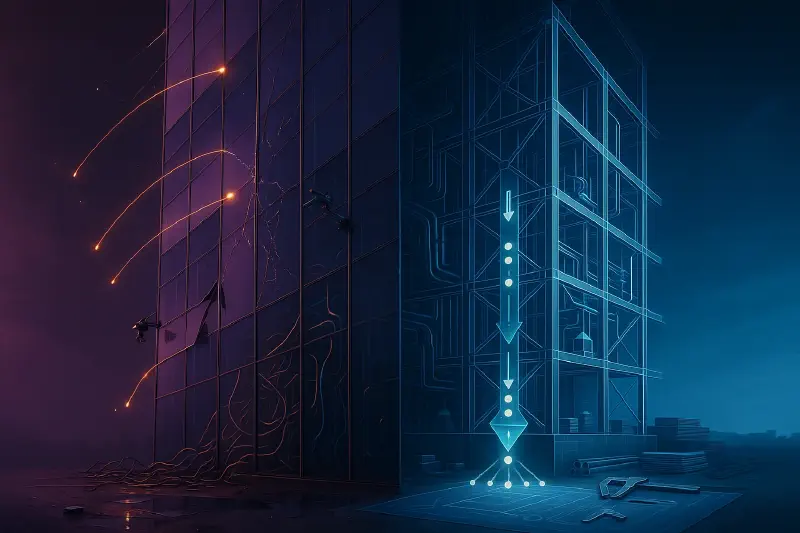

The Skyscraper Nobody Can Live In

Think about building a skyscraper for a moment. You need two completely different types of expertise:

The Architects and Engineers design the building. They figure out where the load-bearing walls go, how to route all the electrical wiring and plumbing, what materials can handle the wind shear at that height, how the building responds to earthquakes. They think about fire escapes and elevator shafts and how people will actually move through the space. They create detailed specifications for everything.

The Construction Workers build it. They know how to mix concrete, how to weld steel beams, how to install windows. Give them a good blueprint with all the specifications—“use this gauge of rebar, space them 6 inches apart, let the concrete cure for 48 hours”—and they’ll build you something solid and safe.

Here’s the interesting part: construction workers understand building principles. They know that rebar makes concrete stronger, that you can’t just drill holes wherever you want in a load-bearing wall, that certain materials expand in heat. They might not be able to calculate the exact compression loads or explain the physics, but they know how to build.

So in theory, if you asked construction workers to build a skyscraper without giving them architectural plans, they could do it. They’d use their knowledge to put up walls and install wiring and plumbing. The building would stand.

For a while.

Then someone tries to actually live in it. Or an earthquake hits. Or there’s a fire. And you realize the electrical system is a tangled mess that can’t handle the load. The plumbing creates massive pressure problems on the upper floors. There are no proper fire exits. The structure can’t flex with the wind. It looks like a building, but it’s not safe, not livable, not reliable. It’s an expensive disaster wrapped in a pretty facade.

You really needed those architects and engineers.

We Accidentally Merged Two Jobs

Now let’s talk about software. In our industry, we have the same two roles—but we’ve gotten confused about which is which.

Software Engineers are supposed to design systems. They think about scalability, reliability, security, maintainability. They establish patterns and guardrails. They consider edge cases: What happens when 10,000 users hit this endpoint simultaneously? How do we handle partial failures? What’s our data consistency model? How do we make this debuggable when something goes wrong at 3am? They create the “blueprints” that make the difference between software that works on localhost and software that works in production.

Programmers write code. They understand syntax, algorithms, design patterns. They know how to implement features, how to make things work. Given good specifications and architectural guidelines, they write solid, maintainable code that does exactly what it needs to do.

But here’s where we got confused: engineers also write code. They have to—you can’t design a system well if you don’t understand implementation realities. An architect who doesn’t understand how concrete and steel actually behave can’t design a building that stands. Similarly, a software engineer needs to understand how code actually executes.

Because of this overlap, we started treating them as the same job. Most companies just have “software engineers” who do everything—system design and implementation. We stopped distinguishing between the person who decides “we need a caching layer with these invalidation rules” and the person who implements Redis with that specification.

For years, this worked okay. The engineer-programmer would design the system in their head, then implement it. Both roles were happening, just inside one person.

Enter the AI Programmer

Now we have large language models that can write code. And here’s what I’ve realized: LLMs are incredible programmers, but terrible engineers.

An LLM is like that construction worker who can build you a skyscraper without blueprints. Ask it to “build me a todo list app,” and it will. The code will be clean. It’ll have proper separation of concerns. The variable names will make sense. It’ll work beautifully on your laptop.

But it’s missing all the engineering:

- No load considerations: It’ll use an in-memory array for the todo list, which works great for testing but fails when you have 10,000 users

- No failure handling: What happens when the database connection drops mid-request? The LLM’s code probably doesn’t handle that gracefully

- No security thinking: Authentication might be present, but is it actually secure against common attacks? Are there SQL injection vulnerabilities?

- No performance engineering: That nested loop through every user’s todos on every request? Nobody checked how it behaves once there are thousands of users

- No operational thinking: Good luck debugging when something goes wrong—there’s minimal logging and no monitoring
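To make the security bullet concrete, here is a minimal sketch of the kind of SQL injection hole that can hide in clean-looking code. It uses Python's built-in `sqlite3` module; the `todos` table and the function names are hypothetical, invented for illustration rather than taken from any real project.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    # Hypothetical schema: one row per todo, tagged with its owner.
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE todos (id INTEGER PRIMARY KEY, owner TEXT, title TEXT)"
    )
    conn.executemany(
        "INSERT INTO todos (owner, title) VALUES (?, ?)",
        [("alice", "file taxes"), ("bob", "buy milk")],
    )
    return conn


def todos_unsafe(conn: sqlite3.Connection, owner: str) -> list[str]:
    # Looks tidy, but the caller's input is pasted straight into the SQL text.
    query = f"SELECT title FROM todos WHERE owner = '{owner}'"
    return [row[0] for row in conn.execute(query)]


def todos_safe(conn: sqlite3.Connection, owner: str) -> list[str]:
    # A bound parameter is always treated as data, never as SQL.
    query = "SELECT title FROM todos WHERE owner = ?"
    return [row[0] for row in conn.execute(query, (owner,))]


if __name__ == "__main__":
    conn = make_db()
    attack = "x' OR '1'='1"
    print(todos_unsafe(conn, attack))  # leaks every user's todos
    print(todos_safe(conn, attack))    # no owner has that literal name
```

Both functions pass a happy-path test with `owner="alice"`. Only someone asking "what does malicious input do here?" would catch the difference.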

The code looks professional. It follows good practices. It even works, in the ideal case. But the moment reality hits—concurrent users, network failures, edge cases, malicious input—it falls apart.

Just like that skyscraper built without architects.

What This Actually Means

I used to think the distinction between “engineer” and “programmer” was just semantic nitpicking. Now I realize it’s crucial, and we’re about to learn this lesson the hard way.

When you tell an LLM “build me an authentication system,” you’re giving it a task without constraints. It’s like telling a construction worker “build me a building” without specifying:

- How many people it needs to support

- What kind of disasters it needs to withstand

- What the fire safety requirements are

- How the utilities should be routed

- What the budget constraints are

The LLM will build you something. That something might even impress you. But it won’t be robust, because nobody did the engineering.

Here’s a real example from my startup, Empath Legal. We’re building jury selection tools for attorneys. I could ask an LLM: “Create an API endpoint that analyzes jury questionnaires and returns insights.”

The LLM would build me something functional:

- It would parse the questionnaires

- It would call an LLM to analyze the text

- It would return formatted results

- The code would be clean and well-structured

But here’s what it wouldn’t consider without engineering input:

Business requirements I need to specify:

- These questionnaires can be 50+ pages and attorneys need results in under 30 seconds

- Our customers are lawyers who need citations for everything—we can’t just hallucinate insights

- The analysis must be deterministic enough that an attorney can rely on it in court

- We have a credit-based pricing model, so we need to track and limit usage

Technical guardrails I need to establish:

- We need to chunk large questionnaires intelligently (by section, not arbitrary token counts)

- We must implement streaming so attorneys see progressive results, not a 30-second blank screen

- We need a caching layer so re-analyzing the same questionnaire doesn’t burn credits

- We need robust error handling because LLM API calls fail sometimes

- We need audit trails for compliance reasons
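As one illustration of these guardrails, the caching requirement could be sketched roughly like this. This is not our production code: `AnalysisCache` and the `analyze` callable are hypothetical names, the cache lives in memory only, and a real version would need persistence and an invalidation policy.

```python
import hashlib
from typing import Callable, Dict


class AnalysisCache:
    """Caches analysis results by a hash of the questionnaire text.

    `analyze` stands in for the expensive, credit-consuming LLM call.
    """

    def __init__(self, analyze: Callable[[str], str]) -> None:
        self._analyze = analyze
        self._results: Dict[str, str] = {}
        self.billable_calls = 0  # how many times we actually paid for analysis

    def get(self, questionnaire_text: str) -> str:
        # Identical text always hashes to the same key, so a re-upload of the
        # same questionnaire is served from the cache instead of re-analyzed.
        key = hashlib.sha256(questionnaire_text.encode("utf-8")).hexdigest()
        if key not in self._results:
            self.billable_calls += 1
            self._results[key] = self._analyze(questionnaire_text)
        return self._results[key]


if __name__ == "__main__":
    cache = AnalysisCache(lambda text: f"insights for {len(text)} characters")
    cache.get("Juror 12: I have served on a jury before.")
    cache.get("Juror 12: I have served on a jury before.")
    print(cache.billable_calls)
```

Keying on the content hash rather than a filename or upload ID is a design choice: it means the same document uploaded twice under different names still costs credits only once.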

System design decisions I need to make:

- Should this be synchronous or async? (Async, because of the 30-second requirement)

- What happens if the LLM call fails midway? (We need idempotent retries)

- How do we handle rate limits from the LLM provider? (We need a queue with backpressure)

- What’s our data consistency model? (We need to decide what happens if analysis partially completes)
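The retry decision above might translate into something like the following sketch. `TransientError` and `call_with_retries` are hypothetical names, and the sketch assumes the wrapped operation is idempotent, meaning it is safe to run more than once. Retrying a non-idempotent call could repeat its side effects.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, rate limits)."""


def call_with_retries(
    fn: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on TransientError with exponential backoff.

    `sleep` is injectable so tests can run without real waiting.
    """
    for attempt in range(max_attempts):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            # Wait base_delay, then 2x, then 4x, ... before the next attempt.
            sleep(base_delay * (2 ** attempt))
    raise ValueError("max_attempts must be at least 1")
```

Only `TransientError` triggers a retry. Any other exception propagates immediately, which is deliberate: retrying a bug or a validation error just wastes time and, in our case, credits.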

The LLM could write beautiful code for the happy path. But without me doing the engineering work—understanding the requirements, establishing the constraints, designing the system architecture—that code would be worthless in production.

The New Division of Labor

This isn’t about LLMs being bad. They’re phenomenal at what they do. This is about understanding what they do.

LLMs are expert programmers: Give them a well-specified task with clear constraints, and they’ll implement it beautifully. They know patterns, they write clean code, they can even debug and optimize within a defined scope.

Humans need to be the engineers: We need to understand the business requirements, design the system architecture, establish the guardrails and principles, consider the edge cases and failure modes. We need to ask: What are we really trying to achieve? What could go wrong? How does this need to scale?

This is actually liberating, if you think about it. We can offload the tedious parts—writing boilerplate, implementing standard patterns, translating specifications into code—and focus on the interesting parts: understanding user needs, designing robust systems, making architectural tradeoffs.

But only if we actually do the engineering work. If we just prompt “build me X” without providing context, constraints, and architectural guidance, we get that beautiful skyscraper that nobody can live in.

How to Actually Work with AI Coding Tools

Here’s what I’ve learned about working effectively with LLMs:

Bad prompt:

“Build me a payment system for my SaaS app”

Good prompt:

“Build me a payment system with these requirements:

- Support credit-based pricing (users buy credits, features consume credits)

- Must handle concurrent credit deductions safely (two API calls shouldn’t both succeed if there are only enough credits for one)

- Need audit trail for billing disputes

- Should fail gracefully if payment processor is down (degrade to cached credit counts, log for reconciliation)

- Performance requirement: credit check must add < 50ms to request latency

Use PostgreSQL with row-level locking for credit deductions. Implement idempotent credit operations so retries don’t double-charge. Include comprehensive logging for debugging billing issues.”

The second prompt does the engineering work. It specifies:

- The business model (credit-based pricing)

- The critical constraint (safe concurrent access)

- The compliance requirement (audit trail)

- The reliability requirement (graceful degradation)

- The performance requirement (latency budget)

- The technical approach (PostgreSQL with row locks)

- The operational requirement (debuggable)

Now the LLM can write code that actually works in production, because I’ve given it the architectural blueprint.

The Uncomfortable Truth

Most of us who’ve been writing code for years have been doing both roles simultaneously for so long that we don’t even notice when we’re switching hats. When we sit down to “code,” we’re actually doing engineering work (thinking about requirements and constraints) and then programming work (implementing the solution).

With LLMs, we can’t do that anymore. The programming part gets delegated to the AI. But if we don’t explicitly do the engineering part ourselves—if we just treat the LLM as a magic “build me X” box—we get code that works in the demo but fails in reality.

And here’s the uncomfortable part: many people who call themselves engineers are actually just programmers. They’re really good at writing code, but they’ve never had to think deeply about system design because they were implementing someone else’s architecture, or working on small enough systems that the complexity didn’t matter.

With LLMs, this distinction now matters. The code-writing skills that used to be valuable are being commoditized. What’s becoming valuable is the engineering thinking: understanding requirements, designing robust systems, making good architectural tradeoffs, considering failure modes.

What This Means For You

If you’re learning to code right now, don’t just learn syntax and frameworks. Learn to think like an engineer:

- When you build something, ask: “What happens when 1000 people use this simultaneously?”

- Before writing code, ask: “What are all the ways this could fail, and how should it handle each one?”

- Study real-world system design, not just toy examples

- Learn to write specifications that would let someone else implement your vision correctly

- Understand the why behind patterns, not just the how

If you’re already working as a developer, pay attention to which role you’re actually performing. Are you mostly implementing features based on specs? You’re in the programmer role, and LLMs are coming for that job. Start developing engineering skills: system design, requirements analysis, architectural thinking.

If you’re hiring, stop asking “can you code fizzbuzz?” and start asking “design a URL shortener that handles 1000 requests/second and explain your failure modes.” The code-writing part is increasingly automated. The thinking part isn’t.

The Punchline

Here’s the irony: I wrote this post with Claude’s help. Not because I couldn’t write it myself, but because Claude is a better programmer than I am. I gave it my core idea, my architectural guidelines (the blog writing guide), my requirements (make it engaging and human), and my constraints (use the construction analogy, include real examples).

Claude wrote beautiful prose. But I did the engineering: I decided what to communicate, how to structure the argument, what examples would land, what the reader needs to understand.

The future of software development isn’t “no human involved.” It’s humans doing what humans are uniquely good at—understanding messy real-world requirements, making tradeoffs with incomplete information, designing robust systems for complex environments—while AI does what it’s uniquely good at: writing clean, correct code that implements those specifications.

But only if we remember to do the engineering work. Otherwise, we’re just building skyscrapers without architects.

And we all know how that story ends.

What’s your experience with AI coding tools? Are you finding yourself doing more engineering thinking, or just prompting and hoping? I’d love to hear your stories—the spectacular failures are often more educational than the successes.

(Written by Human, improved using AI where applicable.)