If you’ve ever wondered how some new language models are now chewing through entire books, transcripts, or even long-winded technical docs without running out of memory or context—you’re not alone. The answer isn’t magic; it’s something called Ring Attention, and it’s a bit like organizing a messy desk with infinite post-its, a whiteboard, and a photographic memory that just happens to forget the boring stuff.

Let’s break it down in human terms, one step at a time.

What Problem Is Ring Attention Solving?

Standard transformer models do something clever but computationally expensive: every word (token) looks at every other word in the sequence. This is known as full self-attention, and while it’s powerful, it also scales quadratically. In plain speak: the longer the input, the slower and more memory-hungry it gets.

That’s fine if you’re summarizing tweets, but what if your model needs to:

- Read a thousand-page deposition transcript,

- Digest multiple research papers,

- Or just follow a complex conversation with tangents and callbacks?

Enter Ring Attention. It’s a solution designed to handle long-context reasoning without melting your GPU.

A Real-World Analogy: Your Brain at a Dinner Party

Imagine you’re at a 3-hour dinner party (lucky you) with deep conversations happening around the table.

- You remember the last few things said really well (like a sliding window of attention).

- You summarize earlier parts of the evening in your head: “We talked about AI, then politics, then pizza preferences.”

- You tune out the boring bits and only remember the key takeaways.

- And eventually, some things just fade—unless something or someone makes them suddenly relevant again.

Ring Attention mimics that. Let’s go over the components that make it work.

The Three Building Blocks of Ring Attention

1. Sliding Window Attention (aka Short-Term Memory)

This part is pretty straightforward. The model focuses strongly on the most recent k tokens—think of it like reading the last paragraph to stay coherent while writing.

For instance:

“The chef prepared a complex dish with saffron, truffle oil, and other rare ingredients that delighted the guests.”

When generating the word “delighted,” the model mostly attends to “ingredients that.” That’s your short-term memory.

2. Compressed Memory (Summarizing the Past)

But what about the earlier parts of the conversation or document? Instead of tossing them out, the model creates summarized representations of those older chunks. These aren’t raw tokens—they’re compressed vectors, almost like bullet-point summaries.

From the earlier sentence, this might be compressed into:

“chef + complex + rare ingredients”

This lets the model recall key ideas without needing every word. It’s like folding a page into a sticky note with just the headline.

3. Selective Token Referencing (Prioritizing What Matters)

Not every word is worth remembering. Some words are semantically rich (“truffle oil”), and others are just filler (“with”, “and”).

Ring Attention lets the model choose what’s worth storing long-term. It might use attention scores or learned heuristics to tag the good stuff for memory.

Think of it like highlighting passages in a book. You don’t mark everything—just the juicy parts.

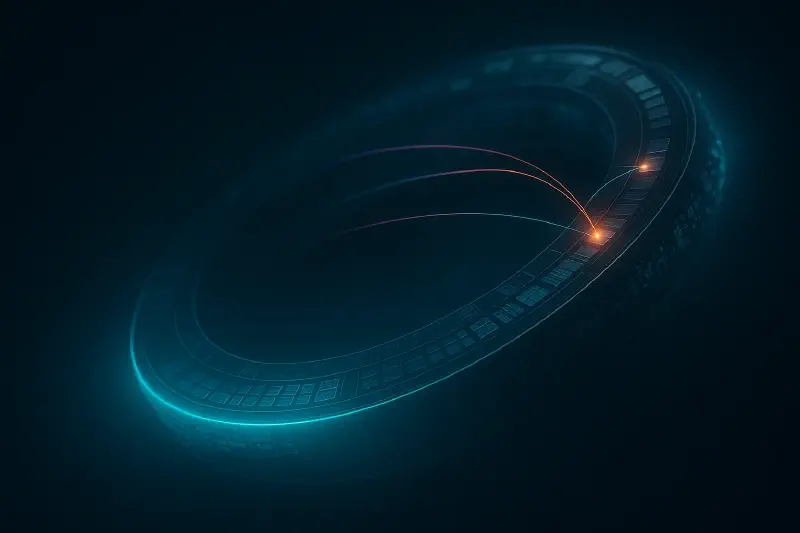

The Ring Part: Why Is It Called That?

Here’s the elegant twist: this attention memory isn’t just a one-way list. It’s stored in a circular buffer—like a ring. This means:

- Memory space stays constant regardless of input length.

- Older compressed tokens get evicted as new ones enter.

- But the ring lets the model keep scanning the most relevant parts as needed.

Kind of like a sushi conveyor belt: important dishes (tokens) stay longer, and boring ones just spin off the belt.

So What Can This Actually Do?

Models with Ring Attention can:

- Read entire books and refer back to Chapter 1 while summarizing Chapter 10.

- Handle extremely long transcripts, support tickets, legal docs, or codebases.

- Follow narratives with callbacks, subtle patterns, or recurring characters/events.

That’s why models like Gemini 1.5 and Claude 2.1+ advertise context windows with millions of tokens—and actually use them meaningfully.

A Quick Summary for the TL;DR Crew

- Ring Attention = sliding window + compressed memory + selective referencing.

- Keeps the most important parts of long documents accessible without high memory cost.

- Works kind of like human memory: recent stuff is detailed, older stuff is summarized, and only the highlights get remembered.

- Enables next-gen LLMs to scale to massive contexts.

Still Curious? Some Open Questions

- How do we make compression even smarter—can the model summarize like a human?

- Could different types of memory (episodic vs semantic) make Ring Attention more modular?

- Is there a hybrid between full attention and ring that gives us the best of both?

If you’re building models, or just fascinated by how they work, these questions are wide open—and very much worth exploring.

Happy hacking 🧠